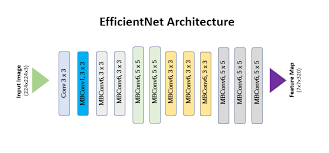

EfficientNet-TensorRT

Environment: Ubuntu 20.04, CUDA > 11.0, cuDNN > 8.2, CMake > 3.1, TensorRT > 8.0

There are generally three ways to build a model for inference with TensorRT:

(1) Use the TensorRT integration provided by the framework, such as TF-TRT or Torch-TRT;

(2) Use a parser front end, e.g. TF/Torch/... -> ONNX -> TensorRT;

(3) Use the TensorRT native API to build the network.

Going from (1) to (3), the difficulty increases and the ease of use decreases, while performance and compatibility improve. Here we go straight to the third method.

Build phase

Logger, Builder, BuilderConfig, Network, SerializedNetwork

Runtime phase

Engine, Context, buffer binding, Execute
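Before Execute is called in the runtime phase, every input and output binding needs a buffer of the right size. The sketch below shows the size arithmetic for a classifier. It assumes a single NCHW float32 input (batch × 3 × input_h × input_w) and a float32 output with one score per class. That layout is typical but not confirmed for this project, and `BindingShapes`, `inputBytes` and `outputBytes` are hypothetical names. In real code these byte counts would be passed to `cudaMalloc`.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a classifier's I/O shapes (not a TensorRT type).
struct BindingShapes {
    std::size_t batch;
    std::size_t channels;     // 3 for an RGB image
    std::size_t input_h;
    std::size_t input_w;
    std::size_t num_classes;  // length of the output score vector per image
};

// Bytes needed for the input binding, assuming an NCHW float32 layout.
std::size_t inputBytes(const BindingShapes& s) {
    assert(s.batch > 0 && s.channels > 0);
    return s.batch * s.channels * s.input_h * s.input_w * sizeof(float);
}

// Bytes needed for the output binding, assuming one float32 score per class.
std::size_t outputBytes(const BindingShapes& s) {
    assert(s.batch > 0);
    return s.batch * s.num_classes * sizeof(float);
}
```

Take the b3_flower parameters used later in this README (300 × 300 input, 17 classes) with batch 1. `inputBytes` gives 1 × 3 × 300 × 300 × 4 = 1,080,000 bytes and `outputBytes` gives 68 bytes, assuming a 4-byte `float`.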

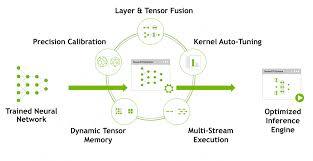

What does TensorRT do?

TensorRT deeply optimizes the runtime efficiency of inference:

Automatically select the optimal kernel

Matrix multiplication and convolution each have multiple CUDA implementations, and TensorRT automatically selects the best one according to the size and shape of the data.
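The idea behind automatic kernel selection can be illustrated on the CPU. Run several functionally equivalent implementations on the actual problem size, time each one, and keep the fastest. This is only an analogy and assumes nothing about how TensorRT measures or caches its choices. The two loop orders and the helper names (`matmulIJK`, `matmulIKJ`, `selectFastest`) are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

using Matrix = std::vector<float>;  // row-major n x n
using MatMulFn = std::function<void(const Matrix&, const Matrix&, Matrix&, std::size_t)>;

// Candidate 1: textbook i-j-k loop order.
void matmulIJK(const Matrix& a, const Matrix& b, Matrix& c, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j) {
            float acc = 0.0f;
            for (std::size_t k = 0; k < n; ++k) acc += a[i * n + k] * b[k * n + j];
            c[i * n + j] = acc;
        }
}

// Candidate 2: i-k-j loop order, which walks b and c contiguously.
void matmulIKJ(const Matrix& a, const Matrix& b, Matrix& c, std::size_t n) {
    std::fill(c.begin(), c.end(), 0.0f);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t k = 0; k < n; ++k) {
            const float aik = a[i * n + k];
            for (std::size_t j = 0; j < n; ++j) c[i * n + j] += aik * b[k * n + j];
        }
}

// Times every candidate on an n x n problem and returns the index of the fastest one.
std::size_t selectFastest(const std::vector<MatMulFn>& candidates, std::size_t n) {
    assert(!candidates.empty());
    Matrix a(n * n, 1.0f), b(n * n, 2.0f), c(n * n);
    std::size_t best = 0;
    double bestTime = std::numeric_limits<double>::max();
    for (std::size_t idx = 0; idx < candidates.size(); ++idx) {
        const auto start = std::chrono::steady_clock::now();
        candidates[idx](a, b, c, n);
        const std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - start;
        if (elapsed.count() < bestTime) {
            bestTime = elapsed.count();
            best = idx;
        }
    }
    return best;
}
```

A call such as `selectFastest({matmulIJK, matmulIKJ}, 256)` returns the index of the faster candidate for that size. The winner can differ for other sizes, which is why the choice is made per shape.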

Computation graph optimization

Generates an optimized computation graph for the network through kernel fusion and fewer data copies.
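EfficientNet contains many Conv+BatchNorm pairs, so one common instance of this kind of graph optimization is relevant here. An inference-mode BatchNorm can be folded into the preceding convolution, so both run as one layer and the intermediate tensor is never written out. Per output channel the folding is W' = W·γ/√(σ²+ε) and b' = (b − μ)·γ/√(σ²+ε) + β.

The sketch below assumes a 1x1 convolution applied to a single pixel, with weights stored as a [C_out][C_in] matrix. The function and struct names are hypothetical, and this is not TensorRT's internal code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// 1x1 convolution on a single pixel: out[o] = sum_i w[o][i] * in[i] + bias[o].
std::vector<float> conv1x1(const std::vector<std::vector<float>>& w,
                           const std::vector<float>& bias,
                           const std::vector<float>& in) {
    std::vector<float> out(w.size());
    for (std::size_t o = 0; o < w.size(); ++o) {
        assert(w[o].size() == in.size());
        float acc = bias[o];
        for (std::size_t i = 0; i < in.size(); ++i) acc += w[o][i] * in[i];
        out[o] = acc;
    }
    return out;
}

// Inference-mode BatchNorm parameters, one entry per output channel.
struct BatchNorm {
    std::vector<float> gamma, beta, mean, var;
    float eps;
};

// Folds the BatchNorm into the convolution weights and bias in place.
void foldBatchNorm(std::vector<std::vector<float>>& w, std::vector<float>& bias,
                   const BatchNorm& bn) {
    for (std::size_t o = 0; o < w.size(); ++o) {
        const float scale = bn.gamma[o] / std::sqrt(bn.var[o] + bn.eps);
        for (float& wi : w[o]) wi *= scale;
        bias[o] = (bias[o] - bn.mean[o]) * scale + bn.beta[o];
    }
}

// Unfused reference: convolution, then a separate BatchNorm pass over its output.
std::vector<float> convThenBatchNorm(const std::vector<std::vector<float>>& w,
                                     const std::vector<float>& bias,
                                     const BatchNorm& bn, const std::vector<float>& in) {
    std::vector<float> out = conv1x1(w, bias, in);
    for (std::size_t o = 0; o < out.size(); ++o)
        out[o] = bn.gamma[o] * (out[o] - bn.mean[o]) / std::sqrt(bn.var[o] + bn.eps) + bn.beta[o];
    return out;
}
```

After `foldBatchNorm`, a single `conv1x1` call produces the same result as `convThenBatchNorm`, up to float rounding, with one pass fewer over the data.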

FP16/INT8 support

Converts numerical values to lower precision with appropriate scaling, making full use of the hardware's high-throughput, low-precision compute capabilities.
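The INT8 path rests on mapping float values to 8-bit integers through a scale factor. The sketch below shows symmetric per-tensor quantization and assumes the scale comes from the tensor's maximum absolute value (amax / 127). TensorRT itself obtains its scales through calibration or explicit quantization, and the function names here are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Symmetric scale: the largest magnitude in the tensor maps to 127.
float symmetricScale(const std::vector<float>& x) {
    float amax = 0.0f;
    for (float v : x) amax = std::max(amax, std::fabs(v));
    return amax > 0.0f ? amax / 127.0f : 1.0f;  // avoid a zero scale for an all-zero tensor
}

// q = clamp(round(x / scale), -127, 127)
std::vector<std::int8_t> quantize(const std::vector<float>& x, float scale) {
    assert(scale > 0.0f);
    std::vector<std::int8_t> q(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        const float r = std::round(x[i] / scale);
        q[i] = static_cast<std::int8_t>(std::min(std::max(r, -127.0f), 127.0f));
    }
    return q;
}

// x is approximately q * scale; the rounding error is at most scale / 2 per element.
std::vector<float> dequantize(const std::vector<std::int8_t>& q, float scale) {
    std::vector<float> x(q.size());
    for (std::size_t i = 0; i < q.size(); ++i) x[i] = static_cast<float>(q[i]) * scale;
    return x;
}
```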

How to build?

Warning! You have to change some paths:

CMakeLists.txt:

```cmake
# tensorrt (replace xxx with the directory where TensorRT is installed)
include_directories(xxx/TensorRT-8.4.3.1/include/)
link_directories(xxx/TensorRT-8.4.3.1/lib/)
```

efficientnet.cpp:

Around line 40, define your model's global params:

```cpp
static std::map<std::string, GlobalParams> global_params_map = {
    // input_h, input_w, num_classes, batch_norm_epsilon,
    // width_coefficient, depth_coefficient, depth_divisor, min_depth
    {"b3_flower", GlobalParams{300, 300, 17, 0.001, 1.2, 1.4, 8, -1}},
    // add your own EfficientNet training params here
};
```
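The last four fields drive EfficientNet's compound scaling. The sketch below follows the round_filters / round_repeats logic of the reference EfficientNet implementation:

- A channel count is multiplied by width_coefficient, rounded to a multiple of depth_divisor, and never rounded down by more than 10%.
- A stage's block count is multiplied by depth_coefficient and rounded up.

This `GlobalParams` struct is a hypothetical reconstruction from the field order in the comment above, and `roundFilters` / `roundRepeats` are hypothetical names. Treating a negative min_depth as "fall back to depth_divisor" is an assumption about what the -1 means.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical reconstruction; field order follows the comment in global_params_map.
struct GlobalParams {
    int input_h;
    int input_w;
    int num_classes;
    double batch_norm_epsilon;
    double width_coefficient;
    double depth_coefficient;
    int depth_divisor;
    int min_depth;
};

// Scales a channel count by width_coefficient and rounds it to a multiple of depth_divisor.
int roundFilters(int filters, const GlobalParams& p) {
    assert(p.depth_divisor > 0);
    const double scaled = filters * p.width_coefficient;
    const int divisor = p.depth_divisor;
    const int minDepth = p.min_depth > 0 ? p.min_depth : divisor;  // assumption: -1 means "use divisor"
    int rounded = std::max(minDepth, static_cast<int>(scaled + divisor / 2.0) / divisor * divisor);
    if (rounded < 0.9 * scaled) rounded += divisor;  // never round down by more than 10%
    return rounded;
}

// Scales the number of blocks in a stage by depth_coefficient, rounding up.
int roundRepeats(int repeats, const GlobalParams& p) {
    return static_cast<int>(std::ceil(p.depth_coefficient * repeats));
}
```

With the b3_flower entry (width 1.2, depth 1.4, divisor 8), `roundFilters(32, ...)` returns 40 and `roundFilters(1280, ...)` returns 1536, matching the stem and head widths of EfficientNet-B3. `roundRepeats(3, ...)` returns 5.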

Around line 274, set your own model's .wts weights file and engine name:

```cpp
std::string wtsPath = "/home/zjd/clion_workspace/efficientnet_b3_flowers.wts";
std::string engine_name = "efficientnet_b3_flowers.engine";
std::string backbone = "b3_flower";  // a key in global_params_map
```

Around line 327, set the input image:

```cpp
cv::Mat img = cv::imread("/home/zjd/clion_workspace/OxFlower17/test/17/1291.jpg", 1);  // 1 == cv::IMREAD_COLOR
```
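Before inference, the image loaded above has to be resized to input_h × input_w and converted into the planar float layout the engine expects. Such an image is an 8-bit, interleaved BGR buffer of height × width × 3 bytes.

The sketch below covers only the layout conversion on a raw pixel buffer, so it has no OpenCV dependency; resizing (for example with `cv::resize`) is left out. It assumes RGB channel order and ImageNet mean/std normalization, which is common for EfficientNet weights but not confirmed for this project. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts an interleaved BGR uint8 image (HWC, as stored in a CV_8UC3 cv::Mat)
// into a planar, normalized float RGB tensor (CHW).
std::vector<float> bgrHwcToNormalizedRgbChw(const std::vector<std::uint8_t>& bgr,
                                            std::size_t h, std::size_t w) {
    assert(bgr.size() == h * w * 3);
    // Assumption: ImageNet statistics, listed in RGB order.
    const float mean[3] = {0.485f, 0.456f, 0.406f};
    const float stdev[3] = {0.229f, 0.224f, 0.225f};
    std::vector<float> chw(3 * h * w);
    for (std::size_t y = 0; y < h; ++y) {
        for (std::size_t x = 0; x < w; ++x) {
            const std::size_t pix = y * w + x;
            for (std::size_t c = 0; c < 3; ++c) {
                // Output channel c (R, G, B) reads input byte 2 - c (B, G, R order).
                const float v = bgr[pix * 3 + (2 - c)] / 255.0f;
                chw[c * h * w + pix] = (v - mean[c]) / stdev[c];
            }
        }
    }
    return chw;
}
```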

Around line 370, set the file that holds the class labels (the variable is named json_fileName, but here it points to a .txt file):

```cpp
std::string json_fileName("/home/zjd/tensorrtx/efficientnet/flowers_labels.txt");
```
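The engine returns num_classes scores. Printing a class label requires the label list and the index of the highest score. The sketch below assumes the labels file has one label per line, with line i naming class i. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Reads one label per line; line i is assumed to name class i.
std::vector<std::string> readLabelsFile(const std::string& path) {
    std::ifstream in(path);
    std::vector<std::string> labels;
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate Windows line endings
        labels.push_back(line);
    }
    return labels;
}

// Index of the largest score, i.e. the predicted class.
std::size_t argmax(const std::vector<float>& scores) {
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i)
        if (scores[i] > scores[best]) best = i;
    return best;
}

// Maps the output scores to the corresponding label string.
std::string predictLabel(const std::vector<float>& scores, const std::vector<std::string>& labels) {
    const std::size_t idx = argmax(scores);
    assert(idx < labels.size());
    return labels[idx];
}
```

Taking the argmax of the raw scores gives the same class as taking it after a softmax, so the softmax can be skipped when only the label is needed.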

Then build:

```shell
cd ./EfficientNet-TensorRT
mkdir build
cd build
cmake ..
make
./efficientnet   # first run: builds the engine
# [output]: build finished
./efficientnet   # second run: runs inference
# [output]: Class label: xxxx
```
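The two runs above suggest that the program serializes the engine to engine_name on the first run and loads it on later runs. The sketch below shows that build-once, load-afterwards pattern. It assumes the decision is made by checking whether the engine file already exists. The build step is a stand-in callback, where the real code would call the TensorRT builder and serialize the network. `loadOrBuildEngine` is a hypothetical name.

```cpp
#include <cassert>
#include <fstream>
#include <functional>
#include <ios>
#include <iterator>
#include <string>
#include <vector>

using Blob = std::vector<char>;

// Returns the serialized engine stored at path. If the file does not exist yet, the engine is
// built via the callback and written to path first. wasBuilt reports which branch was taken.
Blob loadOrBuildEngine(const std::string& path, const std::function<Blob()>& build, bool& wasBuilt) {
    assert(!path.empty());
    std::ifstream in(path, std::ios::binary);
    if (in) {
        wasBuilt = false;
        return Blob(std::istreambuf_iterator<char>(in), std::istreambuf_iterator<char>());
    }
    Blob engine = build();  // stand-in for the TensorRT build + serialization step
    std::ofstream out(path, std::ios::binary);
    out.write(engine.data(), static_cast<std::streamsize>(engine.size()));
    wasBuilt = true;
    return engine;
}
```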